Decision-makers in data-driven firms, whether marketing managers or C-level executives, demand figures and strong evidence that a proposed change will succeed. That is why A/B testing has grown in popularity and is now regularly conducted before any change to the functionality or look of a landing page, web application, website design, or even an email campaign is implemented.

There is a learning curve to consider when starting out with A/B tests. You might expect to become an A/B testing expert after only a few tests, but you will almost certainly make many errors along the way, and some of your A/B tests will fail or produce incorrect results. Don't worry: this post covers some of the most typical blunders in A/B testing. We'll also go over how to avoid them if you're about to run your first A/B test.

1. Overlooking statistics

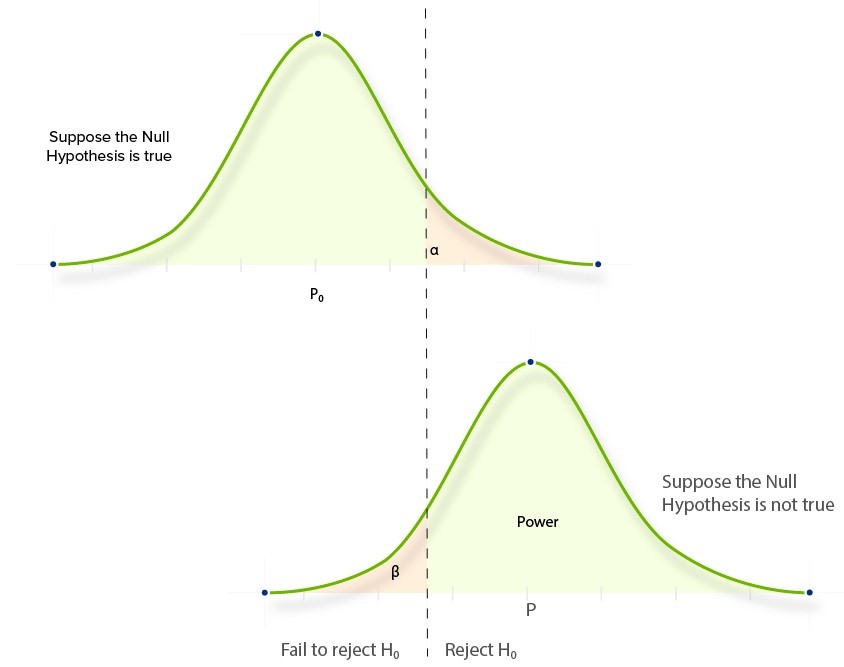

Statistical significance indicates how unlikely it is that the difference between the control and test versions of your experiment is due to chance or error. A result is usually called statistically significant when a difference that large would rarely appear by chance alone.

If you ignore statistical significance and rely on gut instinct rather than mathematics, you will probably end your test too soon, before it has collected enough data to be statistically significant. As a result, you are likely to draw incorrect conclusions.

Statistical significance is determined by a straightforward computation that does not require a degree in economics or science. There are several free statistical significance calculators available on the Internet to assist you in estimating what is required to achieve it.

The following are some of the variables that such calculators consider:

The number of visitors in the control version

The number of visitors in the test version

The number of conversions in the control version

The number of conversions in the test version
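
As a rough illustration of what such a calculator does with the four inputs listed above, here is a minimal sketch of a two-sided two-proportion z-test. This is one common approach, not necessarily the one any particular online calculator uses. The function name `ab_significance` and the visitor and conversion counts are made up for the example.

```python
from math import sqrt
from statistics import NormalDist


def ab_significance(control_visitors, control_conversions,
                    test_visitors, test_conversions):
    """Two-sided two-proportion z-test. Returns (z, p_value).

    Assumes both versions had visitors and that the pooled conversion
    rate is strictly between 0 and 1 (otherwise the standard error is 0).
    """
    p_control = control_conversions / control_visitors
    p_test = test_conversions / test_visitors
    # Pooled conversion rate under the assumption that both versions perform equally.
    pooled = (control_conversions + test_conversions) / (control_visitors + test_visitors)
    std_error = sqrt(pooled * (1 - pooled) * (1 / control_visitors + 1 / test_visitors))
    z = (p_test - p_control) / std_error
    # Probability of seeing a difference at least this large by chance alone.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical numbers: 5,000 visitors per version, 250 vs. 300 conversions.
z, p_value = ab_significance(5000, 250, 5000, 300)
print(f"z = {z:.2f}, p-value = {p_value:.4f}")
print("significant at the 5% level" if p_value < 0.05 else "not significant yet")
```

A p-value below the threshold you chose before the test (0.05 is a common convention) suggests the difference is unlikely to be due to chance alone.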

2. Testing too many things at once

It's tempting to test many components at once, such as email personalization, emojis, company names in the email subject line, and more.

One reason people test many items at once is to save time on lengthy testing. However, if you use this method, you may not be able to tell what worked and what didn't. In the email example above, you might improve your deliverability, open rate, and conversions without knowing which change affected those metrics.

If you need to test more than one element on a page, use multivariate testing, and only do so on websites with a good amount of traffic. You can use this approach to evaluate new pictures, title tag performance, and sign-up form length, with each combination of changes running as its own test version.

The main takeaway is to avoid running several split tests on the same page at the same time. Consider multivariate testing instead, and keep in mind that only high-traffic websites can support testing many items at once.
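
To see why traffic matters so much here, consider how quickly the number of test versions grows. The sketch below is only an illustration: the element names, their variants, and the monthly visitor figure are all hypothetical.

```python
from itertools import product

# Hypothetical page elements, each with two variants.
elements = {
    "picture": ["current", "new"],
    "title_tag": ["current", "new"],
    "signup_form": ["long", "short"],
}

# A full multivariate test needs one version per combination of variants.
combinations = list(product(*elements.values()))

monthly_visitors = 20_000  # assumed traffic, for illustration only
visitors_per_combination = monthly_visitors // len(combinations)

print(f"{len(combinations)} combinations")
print(f"about {visitors_per_combination} visitors per combination per month")
```

Three elements with two variants each already produce eight versions, so each version sees only one eighth of the traffic. Adding a fourth element would double the number of versions again.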

3. Wrong hypothesis

When starting A/B testing, begin by establishing a hypothesis that specifies a problem and a solution, as well as the success criteria you will use to quantify outcomes. Your hypothesis can't be based on guesswork; otherwise, the A/B test will be useless.

A hypothesis is a proposed solution or explanation for a situation. For example, you may notice that visitors do not purchase because your website does not look like a trustworthy business to them. Your hypothesis should be based on the evidence you gather from users, and the purpose of your test should be to solve a specific problem that they are experiencing. Such problems are frequently discovered by observing or speaking with users.

Remember that a hypothesis requires you to measure your outcomes. That's why picking the correct success measures to track before and after the test is crucial.

4. Testing meaningless things

Some facts are obvious or already well known. Is it necessary to test them a hundred times to establish that they hold? No: doing so is a waste of time.

Suppose you want to enroll your SaaS customers in a trial of a premium account rather than in a freemium account. If you change the sign-up button URL so that it enrolls people in the premium trial and hide any mention of the permanently free account (on which customers could stay without paying after their trial ends), there is nothing to test. Users are simply pushed toward the premium version without being given a choice.

5. Not enough traffic

For tiny websites with limited traffic, A/B testing is not the best tool to use. A test on a website with 200 monthly visits and only a few conversions would take months, if not years, to reach statistical significance, that is, to let you be confident that the findings are accurate and not due to chance or error.

Before launching a test, you should estimate the amount of traffic it requires. Otherwise, the A/B test will be a waste of time and money because of the effort spent on setup and observation. If you don't have enough traffic, you might first concentrate on increasing it with Google Ads and other comparable channels, so that your tests can reach statistical significance more quickly.
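
One way to estimate the required traffic is the standard normal-approximation formula for comparing two conversion rates. The sketch below is a simplified illustration rather than a replacement for a proper sample size calculator. The function name `visitors_per_variant`, the 5% and 6% conversion rates, and the defaults of 5% significance and 80% power are assumptions chosen for the example; the 200 monthly visits come from the example above.

```python
from math import ceil
from statistics import NormalDist


def visitors_per_variant(baseline_rate, expected_rate, alpha=0.05, power=0.80):
    """Approximate visitors needed in EACH version to detect the change
    from baseline_rate to expected_rate with a two-sided test."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # significance threshold
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))
    effect = expected_rate - baseline_rate
    return ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


# Hypothetical goal: detect a lift from a 5% to a 6% conversion rate.
needed = visitors_per_variant(0.05, 0.06)

monthly_visits = 200  # the small site from the example above
months = ceil(2 * needed / monthly_visits)  # two versions share the traffic

print(f"about {needed} visitors per version")
print(f"about {months} months at {monthly_visits} visits per month")
```

Under these assumptions the small site would need several years of traffic, which is why growing traffic first, or testing changes with a larger expected effect, is often the more practical route.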

A/B testing can fail for a variety of reasons. If you are just getting started, there are several areas where you might make mistakes. Making errors early on can be discouraging and may lead you to abandon A/B testing before you see your first results.